Unfinished Trading Automation

I wrote this post about half a year ago, but it had been sitting in my loose-leaf notes. Here it is, published.

What I didn't do

I spent 90% of my time doing stuff that I ended up scrapping.

Products like Zapier are restrictive (no external libraries, at least at the time of writing) and also very expensive; the cost is on a different order of magnitude.

I also could have set up automation with cron jobs on my Mac. However, that comes with a hard restriction: the machine can never be shut down or asleep when a job is due.

There are out-of-the-box solutions like AI Platform Notebooks or Colab for writing and running code on live machines. Google also offers other managed products like Cloud Composer, not to mention the plethora of non-Google solutions out there. These are great, and I even tried a few of them out, but for one reason or another (wrong use case, too many bells and whistles, expensive, restrictive, etc.), I decided to play with other toys.

I almost went with Papermill as writing code in Jupyter is easy and fast. Papermill gives .ipynb files a level of productionization by allowing parameterization and execution. You can run something like papermill gs://bucket-name/input.ipynb gs://bucket-name/output.ipynb -f parameters.yaml to run and store (integrations include Google Cloud Storage).

There are also best practices that go with productionizing Jupyter (testbook for tests, nbdime for diffs, etc.). Jupyter is super easy to write in, and it supports many different programming languages. This is essentially the process that Netflix and Bilibili use (+ this talk and this post).

The tradeoffs for using Jupyter are speed, size (you have to install the notebook/kernel, papermill, and the APIs), and money (VMs can be expensive). I created an MVP for this (pubsub -> papermill), which I ended up scrapping as well.

What I did (and/or plan on doing / could do)

I wrote a tiny CLI tool in Rust with reqwest and clap that is basically a wrapper around Alpaca's library.

// abridged code

fn buy_asps(reqs: AlpacaEndpoint) {

    // `body` (the order payload) is elided here
    let response = reqs.post_request("/orders", body);

}

The idea was to buy ASPS (foreclosure/forbearance stuff) whenever it looked like people couldn't afford housing.
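The abridged snippet above leaves out the `body` that gets posted to `/orders`. As a rough sketch of what such a payload could look like, here is a std-only helper that builds a market order as a JSON string. The names `Side` and `market_order_body` are hypothetical, not from my actual code, and the field names (`symbol`, `qty`, `side`, `type`, `time_in_force`) follow Alpaca's order request as I understand it, so double-check them against the docs. Real code would more likely lean on serde_json than on `format!`.

```rust
/// Direction of the order; the orders endpoint is assumed to take "buy" or "sell".
#[derive(Debug, Clone, Copy, PartialEq)]
enum Side {
    Buy,
    Sell,
}

impl Side {
    fn as_str(self) -> &'static str {
        match self {
            Side::Buy => "buy",
            Side::Sell => "sell",
        }
    }
}

/// Hypothetical helper: builds the JSON body for a market order that is
/// valid for the current trading day.
/// Assumes `symbol` is a plain ticker with no characters that need JSON escaping.
fn market_order_body(symbol: &str, qty: u32, side: Side) -> String {
    format!(
        r#"{{"symbol":"{}","qty":"{}","side":"{}","type":"market","time_in_force":"day"}}"#,
        symbol,
        qty,
        side.as_str()
    )
}
```

Calling `market_order_body("ASPS", 1, Side::Buy)` gives a string that could be handed to something like `post_request("/orders", body)`.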

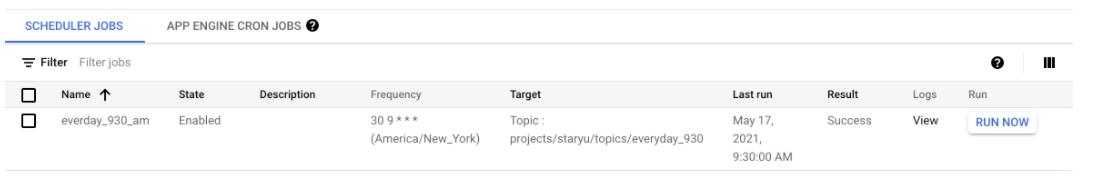

When we get an event from PubSub, we trigger this business logic with a command like cargo run -- --date 20210517 --function account --is_live. There is enough flexibility to run different functions on different dates, using a live account or a paper account, and even more, depending on the PubSub signal. The PubSub signal is created by Cloud Scheduler, which is basically a cron. All of this is controlled in GCP's UI. The binary gets run by Cloud Functions.
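To make the flags in that command a bit more concrete, here is a minimal, std-only sketch of how `--date`, `--function`, and `--is_live` could be parsed and used to pick between the paper and live accounts. This is a hand-rolled stand-in for what clap does in the real tool, not the actual code: `Cli`, `parse_args`, and `base_url` are hypothetical names, and the two base URLs are Alpaca's live and paper endpoints as I understand them.

```rust
/// Options mirroring the flags in the command above (hypothetical struct).
#[derive(Debug, PartialEq)]
struct Cli {
    date: String,
    function: String,
    is_live: bool,
}

/// Parse `--date <YYYYMMDD> --function <name> [--is_live]` from an argument list.
/// Returns an error message for unknown flags or missing values.
fn parse_args<I: IntoIterator<Item = String>>(args: I) -> Result<Cli, String> {
    let mut date = None;
    let mut function = None;
    let mut is_live = false;
    let mut iter = args.into_iter();

    while let Some(arg) = iter.next() {
        match arg.as_str() {
            "--date" => date = Some(iter.next().ok_or("--date needs a value")?),
            "--function" => function = Some(iter.next().ok_or("--function needs a value")?),
            "--is_live" => is_live = true,
            other => return Err(format!("unknown argument: {}", other)),
        }
    }

    Ok(Cli {
        date: date.ok_or("missing --date")?,
        function: function.ok_or("missing --function")?,
        is_live,
    })
}

/// Pick the API host: the live account only when `--is_live` was passed,
/// the paper account otherwise (URLs are an assumption, see above).
fn base_url(cli: &Cli) -> &'static str {
    if cli.is_live {
        "https://api.alpaca.markets"
    } else {
        "https://paper-api.alpaca.markets"
    }
}
```

In a binary, this would be fed with `parse_args(std::env::args().skip(1))`. Defaulting to the paper account unless `--is_live` is present means a malformed PubSub signal is less likely to place a real order.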

However, this kind of just petered out slowly. In the future, I may use Python or Go to rewrite this.

This project costs less than 30 cents a year. I'm just using a lambda function to make cheap calls, while most of these services are intended to be high volume or fast. Each time the binary is rebuilt, the artifact needs to be copied to a repo and published, which is what the CI code does; it is reused from the Papermill MVP above. The same setup is applicable when automating other things, and I'm down to look at this at a later date.